Google recently reported a pretty high robots.txt error rate against this site. As a result, the site’s rankings on Google search result pages dropped by almost 50%. I had no idea what had happened until I found that it may have something to do with CloudFlare.

Google sent me a message about the robots.txt error rate on 8th November 2012. It said my robots.txt error rate was 15.6% and that they had postponed the crawl because of it. I didn’t take it seriously, as sudden issues like this are common with servers. It could have been a temporary network issue at the data center, or Google itself having problems with its network (very unlikely). So I ignored it. I’m not a guy who opens Webmaster Tools every day (I should). After that, Google sent me 3 more messages about the same error, with a higher error rate in each message. I got lazy and didn’t take it seriously until I noticed a traffic drop. It looks like Google has penalized me. This was the most recent message (28th Nov 2012) I received from Google about this:

> Over the last 24 hours, Googlebot encountered 150 errors while attempting to access your robots.txt. To ensure that we didn’t crawl any pages listed in that file, we postponed our crawl. Your site’s overall robots.txt error rate is 72.5%.

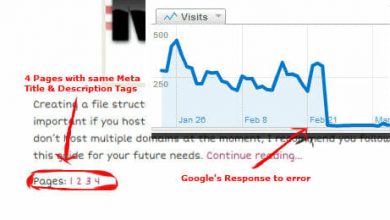

As you can see from the image, my traffic dropped on 21st Nov 2012, the same day Google sent me the 3rd message about the issue. Search queries dropped by almost 50%. Then I panicked and started working on a fix. To fix it, I needed to find out what was causing it. My robots.txt file was working fine at that time, and had been all along. I couldn’t understand what had gone wrong.

I researched online for a few hours and studied similar cases. A support page from Google said that they don’t care if there’s no robots.txt file at all, but if it’s there, it must be accessible to Googlebot. Simply deleting the file might fix the problem, but I had some directories that I wanted to block from being indexed. At least I now understood why Google was showing these errors: they couldn’t access the file. Googlebot sees the file but can’t read it. Something is blocking it from reading it. It could be a redirect or a timeout. I used the Fetch as Googlebot tool several times to see whether it would show something, but it returned ‘success’ every time I tried. I was hopeless.
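The distinction above (a missing file is fine, an unreadable one is not) can be sketched as a small check. This is only a rough illustration: the function names `classify` and `fetch_status` are made up for this example, the user-agent string merely imitates Googlebot, and a request from your own machine does not come from Google’s network, so a proxy may well treat it differently from the real crawler.

```python
import urllib.error
import urllib.request

# A user-agent string imitating Googlebot. This only changes the header;
# the request still comes from your own IP address, not from Google.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def classify(status):
    """Map an HTTP status (None = timeout or connection failure) to a rough verdict."""
    if status is None:
        return "unreachable"   # timeout, DNS failure, connection reset, ...
    if 200 <= status < 300:
        return "ok"            # file exists and is readable
    if status == 404:
        return "missing"       # no robots.txt at all, which Google does not mind
    if 300 <= status < 400:
        return "redirect"      # one of the suspects named in the post
    # Everything else is treated as a problem here; how Google handles
    # each individual code is not covered in the post.
    return "error"


class NoRedirect(urllib.request.HTTPRedirectHandler):
    """Stop urllib from following redirects so a 3xx status stays visible."""

    def redirect_request(self, req, fp, code, msg, headers, newurl):
        return None  # urllib then raises HTTPError carrying the 3xx code


def fetch_status(url, timeout=10.0):
    """Fetch url once and return the HTTP status, or None if unreachable."""
    opener = urllib.request.build_opener(NoRedirect)
    req = urllib.request.Request(url, headers={"User-Agent": GOOGLEBOT_UA})
    try:
        with opener.open(req, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code        # 3xx, 4xx and 5xx all end up here
    except OSError:
        return None            # URLError and socket timeouts are OSError subclasses


# Example (example.com is a placeholder, not the site from the post):
# print(classify(fetch_status("https://example.com/robots.txt")))
```

Running the check repeatedly over a day would give a rough error rate of your own to compare with the one Google reports.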

When you don’t know what’s happening, the best place to look is the server error log. I use Nginx as my web server, so I went ahead and opened the Nginx error log. There was nothing there. The file was empty. That can’t be right; there must be something in it. Then I remembered that I’m using CloudFlare. Requests reach my server through CloudFlare, so a failure on their side would never show up in my Nginx logs. So all this must have something to do with CloudFlare. I’m using CloudFlare as a free user and only started using it one month ago.
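The access log can be checked in the same spirit as the error log. The sketch below counts, per status code, the robots.txt requests whose user agent mentions Googlebot. It assumes Nginx’s default `combined` log format, and the function name `robots_hits` and the sample line are made up for illustration. If Google reports errors at times when no matching request appears here at all, the request probably never reached the origin server.

```python
import re
from collections import Counter

# Nginx "combined" format (assumed):
# ip - user [time] "METHOD path protocol" status bytes "referer" "user-agent"
LINE_RE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<ua>[^"]*)"'
)


def robots_hits(lines):
    """Count status codes of /robots.txt requests made with a Googlebot user agent."""
    counts = Counter()
    for line in lines:
        match = LINE_RE.match(line)
        if not match:
            continue  # skip lines that are not in the assumed format
        path = match.group("path").split("?", 1)[0]  # ignore any query string
        if path == "/robots.txt" and "googlebot" in match.group("ua").lower():
            counts[int(match.group("status"))] += 1
    return counts


# A made-up sample line, not taken from the real log.
sample = [
    '66.249.66.1 - - [21/Nov/2012:10:00:00 +0000] "GET /robots.txt HTTP/1.1" '
    '200 120 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
]
print(robots_hits(sample))
```

With a real log, the lines would come from reading the access log file instead of the `sample` list. Note that behind a proxy the logged IP address is usually the proxy’s, not the crawler’s.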

I did a quick Google search on CloudFlare and robots.txt and found a closely related article by Andrew Girdwood titled ‘Cloudflare’s outages will harm your SEO’. But the issue he was talking about was caused by a router failure and has since been fixed, or something like that. I, on the other hand, had been getting these errors every day for more than 20 days. It’s certainly not a router failure. So I needed to find more evidence before putting the blame on CloudFlare. I went back to Google Webmaster Tools and checked when Google started to see errors. As you can see in the image below, it was 7th Nov 2012.

Then I checked when I started using CloudFlare’s services. It was 6th Nov 2012. So it’s definitely caused by CloudFlare. I don’t understand how. I can access the robots.txt file, and the Fetch as Google tool can access it, but Googlebot can’t access it when crawling. I suspect this has something to do with CloudFlare’s spam filters. Maybe CloudFlare shows a CAPTCHA to Googlebot when it accesses the robots.txt file. I have disabled CloudFlare and updated my nameservers immediately. Hopefully CloudFlare will pay attention to this issue and solve it quickly so I can start using their services again.
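One rough way to test the CAPTCHA suspicion is to look at what actually comes back for robots.txt: a challenge page would be HTML, not a list of directives. The heuristic below is only a sketch. The function name `looks_like_robots_txt` and the list of recognized fields are assumptions made for this example, and nothing here is confirmed to match what CloudFlare really serves.

```python
# Field names commonly seen in robots.txt files (an illustrative list, not exhaustive).
KNOWN_FIELDS = ("user-agent", "disallow", "allow", "sitemap", "crawl-delay")


def looks_like_robots_txt(body):
    """Heuristic: True if body reads like robots.txt directives rather than an HTML page."""
    saw_directive = False
    for raw in body.splitlines():
        line = raw.split("#", 1)[0].strip()   # drop comments and surrounding whitespace
        if not line:
            continue
        if line.startswith("<"):
            return False                      # markup, e.g. a challenge or error page
        field, sep, _value = line.partition(":")
        if sep and field.strip().lower() in KNOWN_FIELDS:
            saw_directive = True
    # An empty file is valid robots.txt, but this heuristic reports it as
    # False because there is nothing to recognize.
    return saw_directive


print(looks_like_robots_txt("User-agent: *\nDisallow: /private/\n"))
print(looks_like_robots_txt("<html><body>Please complete the CAPTCHA</body></html>"))
```

Feeding it the body of each robots.txt response fetched through the proxy would show whether an HTML page is ever served in place of the file.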

Hi. First, thanks for sharing. I’m having the same issue with a website that I just launched, and I couldn’t find the root cause. After reading your article, I will take a look at my CloudFlare account. Interestingly enough, I have another website running on the same server as an add-on domain that is being indexed just fine (and it uses CloudFlare as well). I wonder, what are your thoughts on this dual behaviour?